PI-QT-Opt: Predictive Information Improves Multi-Task Robotic Reinforcement Learning at Scale (CoRL 2022)

Abstract

The predictive information, the mutual information between the past and future, has been shown to be a useful representation learning auxiliary loss for training reinforcement learning agents, as the ability to model what will happen next is critical to success on many control tasks. While existing studies are largely restricted to training specialist agents in single-task settings in simulation, in this work we study modeling the predictive information for robotic agents and its importance for general-purpose agents that are trained to master a large repertoire of diverse skills from large amounts of data. Specifically, we introduce Predictive Information QT-Opt (PI-QT-Opt), a QT-Opt agent augmented with an auxiliary loss that learns representations of the predictive information to solve up to 297 vision-based robot manipulation tasks in simulation and the real world with a single set of parameters. We demonstrate that modeling the predictive information significantly improves success rates on the training tasks and leads to better zero-shot transfer to unseen tasks. Finally, we evaluate PI-QT-Opt on real robots, achieving substantial and consistent improvement over QT-Opt in multiple experimental settings of varying environments, skills, and multi-task configurations.

Approach

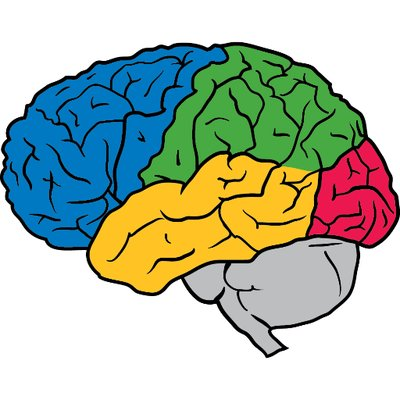

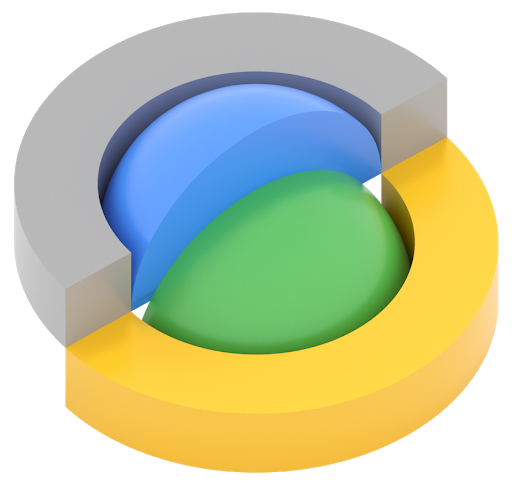

PI-QT-Opt combines a predictive information auxiliary loss, similar to that introduced in PI-SAC, with the QT-Opt architecture. We define the past (X) to be the current state and action, (s, a), and the future (Y) to be the next state, next optimal action, and reward, (s', a', r). A state s includes an RGB image observation and proprioceptive information. Image observations are processed by a simple conv net, the output of which is mixed with the action, proprioceptive state, and current task context using additive conditioning. A second simple conv net processes the combined state representation. All of the convolutional parameters are shared by both the forward encoder e_θ for modeling the predictive information and the Q-function Q_θ, but the shared representation they produce is further processed by separate MLPs. This allows each loss to specialize its representation as needed, while still allowing the predictive information loss to influence the shared convolutional representation. Not shown in Figure 1: the target Q-function and the backward encoder for modeling the predictive information also share a common base, a lagged, non-trainable copy of the convolutional representation. The backward encoder has its own trainable MLP, so that it can learn any differences in dynamics when predicting the past from the future, rather than the future from the past as the forward encoder does. In addition, we concatenate the convolutional representation with the observed reward r(s, a) as the input to the backward encoder MLP head.
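The additive conditioning step mentioned above can be illustrated with a small sketch. This is only one plausible reading, not the authors' implementation: it assumes the action, proprioceptive state, and task context are concatenated into one vector, linearly projected to the number of feature channels, and added at every spatial location of the conv feature map. The names `project` and `additive_conditioning`, the weights, and all sizes are hypothetical.

```python
from typing import List

Vector = List[float]
FeatureMap = List[List[Vector]]  # indexed [row][col][channel]


def project(inputs: Vector, weights: List[Vector]) -> Vector:
    """Linear projection; `weights` holds one row per output channel."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def additive_conditioning(features: FeatureMap, conditioning: Vector,
                          weights: List[Vector]) -> FeatureMap:
    """Add the projected conditioning vector to every spatial location."""
    bias = project(conditioning, weights)
    return [[[f + b for f, b in zip(pixel, bias)] for pixel in row]
            for row in features]


# Hypothetical sizes: a 2x2 feature map with 3 channels and a 4-dim
# conditioning vector standing in for concat(action, proprio, task context).
features = [[[0.0, 1.0, 2.0], [1.0, 1.0, 1.0]],
            [[0.5, 0.5, 0.5], [2.0, 0.0, -1.0]]]
conditioning = [1.0, 0.0, -1.0, 2.0]
weights = [[1.0, 0.0, 0.0, 0.0],   # channel 0 <- conditioning[0]
           [0.0, 0.0, 1.0, 0.0],   # channel 1 <- conditioning[2]
           [0.0, 0.0, 0.0, 0.5]]   # channel 2 <- 0.5 * conditioning[3]

# Every pixel receives the same per-channel offset [1.0, -1.0, 1.0].
mixed = additive_conditioning(features, conditioning, weights)
```

Because the same offset is broadcast over all spatial positions, the conditioning shifts what the second conv net sees without changing the spatial layout of the image features.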

We find that adding a predictive information auxiliary loss is an easy way to give substantial performance improvements to our chosen RL algorithm, as in Lee et al., who introduced Predictive Information Soft Actor-Critic (PI-SAC). However, we note that neither PI-SAC nor SAC on its own was able to solve our tasks, both yielding close-to-zero success rates, which may indicate that the choice of base RL algorithm is still critical.

In order to learn one general-purpose agent for multiple tasks, we condition the Q-functions and the predictive information auxiliary loss on a task context, which describes the specific task that we wish the agent to perform. In our setting, a task involves a robot skill and a set of objects that the robot should interact with. We use two practical implementations of task context in different robot manipulation settings. The first is image-based: a task is specified with the initial image, the initial object locations, and the skill type. Because this context only considers locations and skill types, it could enable good generalization across different and even novel objects. The second is language-based: tasks are specified with natural language, similar to BC-Z.

Results

To analyze how PI-QT-Opt compares with QT-Opt across different multi-task robotic learning scenarios, we explore a variety of challenging simulated and real-world vision-based robotic manipulation environments. While prior results on large-scale robotic grasping focused on a limited set of tasks, we verify the robustness and scalability of PI-QT-Opt by studying many different environments across hundreds of different tasks in the real world. Specifically, we study 6 different multi-task, vision-based robotic manipulation settings in 3 different environments in simulation. Four of the manipulation settings have a corresponding hardware setup that permits real-world evaluation.

We find that PI-QT-Opt is consistently stronger than a baseline QT-Opt method in all domains, including in challenging real-world evaluation scenarios.

A core hypothesis of this work is that the ability to model what will happen next is critical to success on control tasks. This ability can be quantified by the amount of predictive information, I(X, Y), that the agent's representation captures. We analyze the SayCan 300-task PI-QT-Opt model to compare the estimates of I(X, Y) versus TD-error for both successful and failed episodes. We observe that the amount of predictive information is generally higher in successful episodes, and that episodes with high TD-errors have much lower predictive information and are always failures.
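To make "an estimate of I(X, Y)" concrete, here is a minimal sketch of one common contrastive estimator, the InfoNCE lower bound. The page does not say which estimator PI-QT-Opt uses, so InfoNCE is substituted here purely for illustration; the dot-product critic, the function names, and the toy embeddings are all assumptions. Each `forward[i]` stands for a forward-encoder embedding of x_i = (s, a), and `backward[i]` for a backward-encoder embedding of the matching y_i = (s', a', r); the other entries in the batch act as negatives.

```python
import math
from typing import List

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def log_sum_exp(values: List[float]) -> float:
    """Numerically stable log(sum(exp(v)))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def infonce_estimate(forward: List[Vector], backward: List[Vector]) -> float:
    """InfoNCE lower bound on I(X, Y) in nats from a batch of K pairs.

    The bound is log(K) plus the mean log-softmax score of each true pair,
    so it can never exceed log(K).
    """
    k = len(forward)
    total = 0.0
    for i in range(k):
        scores = [dot(forward[i], backward[j]) for j in range(k)]
        total += scores[i] - log_sum_exp(scores)
    return math.log(k) + total / k


# Toy embeddings (hypothetical): well-aligned pairs vs. an uninformative
# backward encoder that maps every future to the same vector.
aligned = [[5.0, 0.0], [0.0, 5.0]]
constant = [[1.0, 1.0], [1.0, 1.0]]

high = infonce_estimate(aligned, aligned)   # close to log(2)
low = infonce_estimate(aligned, constant)   # close to 0
```

Under this estimator, the negated mean log-softmax term is what would be minimized as an auxiliary loss, and the same quantity evaluated per batch gives a number that can be compared across episodes.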

Acknowledgements

The authors would like to thank Alex Herzog, Mohi Khansari, Daniel Kappler, and Peter Pastor for adapting infrastructure and algorithms for the image-based task context from generic to instance-specific grasping, Sangeetha Ramesh for leading robot operations for data collection and evaluations for the VILD model training, and Kim Kleiven for leading the waste sorting service project that constitutes the framework for training and deployment of the instance grasping task set, including defining the benchmark and protocol. We thank Jornell Quiambao, Grecia Salazar, Jodilyn Peralta, Justice Carbajal, Clayton Tan, Huong T Tran, Emily Perez, Brianna Zitkovich, and Jaspiar Singh for helping administer real-world robot experiments. We would also like to thank Sergio Guadarrama and Karol Hausman for valuable feedback.

The website template was borrowed from Jon Barron.

Google Brain

Robotics at Google

Everyday Robots